According to a recent statement by OpenAI CEO Sam Altman, the company plans to simplify its AI products with the upcoming release of GPT-5. The aim is a more streamlined, intuitive experience in which users no longer have to select a model manually.

Unified Intelligence System Goals

OpenAI’s vision for GPT-5 focuses on creating a “unified intelligence” system that seamlessly integrates multiple AI functions. This approach is designed to eliminate the need for users to choose between different models, instead offering a single, powerful AI capable of handling a wide range of tasks. Key goals include:

- Integrating advanced features such as voice interaction, canvas manipulation, search functionality, and deep research capabilities into a unified system.

- Enhancing natural language processing and reasoning abilities to achieve “PhD-level intelligence” in specific tasks.

- Improving multimodal processing for better understanding and generation of responses based on text, images, and potentially video.

- Expanding the context window to handle and remember more information from previous interactions.

- Simplifying the user experience by removing the model selector and providing different intelligence levels across various subscription tiers.

Introduction to Chain-of-Thought Models

The upcoming GPT-5 is expected to introduce advanced Chain-of-Thought (CoT) capabilities that improve its reasoning and problem-solving abilities. The model may also feature enhanced multimodal processing, integrating text, images, and possibly video inputs. Sam Altman hinted that GPT-5 will reason better, make fewer mistakes, and produce more reliable outputs. CoT techniques guide a model through a structured reasoning process, breaking a complex task into manageable steps so that the final response is more accurate and coherent.

Enhanced Multimodal Processing Capabilities

Enhanced multimodal processing is a key focus for GPT-5, as emphasized by Sam Altman. The improvement is intended to let the model integrate text, image, audio, and video inputs and outputs. Expected features include:

- Speech-to-speech capabilities for more natural conversational interactions.

- Improved image processing and generation, building on the image capabilities of earlier models.

- Video support, which would mark a major leap in AI’s ability to understand and generate audiovisual content.

- Unified handling of various data types, creating a more cohesive and context-aware AI experience.

These improvements are expected to open up new possibilities for AI applications across industries, from creative content generation to solving more complex problems in fields such as healthcare and education.

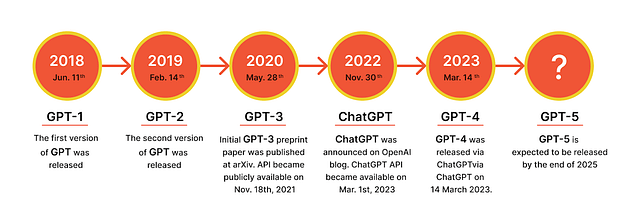

GPT-5 Release Timeline

OpenAI CEO Sam Altman recently announced that GPT-5 will be released in “a few months,” with its predecessor, GPT-4.5, expected to launch within weeks. This updated timeline marks a significant acceleration of OpenAI’s development roadmap. Altman emphasized that GPT-4.5 (internally codenamed Orion) will be the company’s “last non-chain-of-thought model.” GPT-5 will integrate multiple technologies, including OpenAI’s o3 reasoning model, and will include features such as voice interaction, canvas manipulation, and deep research capabilities. This unified approach is designed to streamline OpenAI’s product offerings, providing users with a more intuitive AI experience across different subscription tiers.